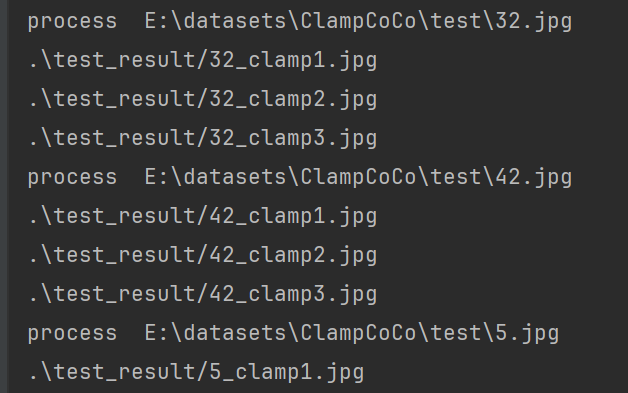

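Yesterday someone in the group chat asked for this feature, so here is an implementation.

The requirement: one image usually contains detected instances of several classes, and the goal is to draw the instances of each class onto an image of their own.

For example, if instances of 3 classes are detected in one image, 3 images are written out, and each of them visualizes only one class.

The file involved is mmdet/visualization/local_visualizer.py.

Main logic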

    def add_datasample():
        # ...
        if draw_pred and data_sample is not None:
            pred_img_data = image
            if 'pred_instances' in data_sample:
                pred_instances = data_sample.pred_instances
                pred_instances = pred_instances[
                    pred_instances.scores > pred_score_thr]
                pred_img_data = self._draw_instances(image, pred_instances,
                                                     classes, palette)
        # ...
        self.set_image(drawn_img)
        # write the result to disk
        if out_file is not None:
            mmcv.imwrite(drawn_img[..., ::-1], out_file)

    def _draw_instances():
        # ...
        # draw detection boxes and labels
        if 'bboxes' in instances and instances.bboxes.sum() > 0:
            self.draw_bboxes()
            for i, (pos, label) in enumerate(zip(positions, labels)):
                self.draw_text()
        # draw instance masks
        if 'masks' in instances:
            # ...
            self.draw_polygons(polygons, edge_colors='w', alpha=self.alpha)
            self.draw_binary_masks(masks, colors=colors, alphas=self.alpha)
        # ...

The change can be made either inside _draw_instances() or inside add_datasample():

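- Modify add_datasample(): fetch pred_instances again, split them by class, then draw and write out each class.
- Modify _draw_instances(): further process the pred_instances passed in, return a list with one visualization image per class, and write the images out in add_datasample().

The approach taken here is close to the second one: build a separate index array for each label to select what should be drawn for that class, then draw and write the results from add_datasample(), leaving _draw_instances() itself unmodified.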
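The per-label index arrays can be pictured with a small stand-alone sketch. Plain Python lists stand in for the tensors held by pred_instances, and the names `split_by_label`, `labels` and `boxes` are made up for illustration; this is not mmdet code.

```python
from typing import Dict, List, Sequence


def split_by_label(labels: Sequence[int]) -> Dict[int, List[bool]]:
    """Build one boolean mask per distinct label, in ascending label order."""
    masks = {}
    for label in sorted(set(labels)):
        masks[label] = [current == label for current in labels]
    return masks


# Hypothetical detections: one label id and one box per instance.
labels = [2, 0, 2, 5]
boxes = ["box_a", "box_b", "box_c", "box_d"]

masks = split_by_label(labels)
# Select the boxes that belong to each class by applying its mask.
per_class_boxes = {
    label: [box for box, keep in zip(boxes, mask) if keep]
    for label, mask in masks.items()
}
print(per_class_boxes)
```

Each mask has the same length as the full list of instances, so the same mask can select the boxes, scores and masks of one class in a consistent way.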

Modify mmdet/visualization/local_visualizer.py

@master_only

def add_datasample(

self,

name: str,

image: np.ndarray,

data_sample: Optional['DetDataSample'] = None,

draw_gt: bool = True,

draw_pred: bool = True,

show: bool = False,

wait_time: float = 0,

# TODO: Supported in mmengine's Viusalizer.

out_file: Optional[str] = None,

pred_score_thr: float = 0.3,

step: int = 0) -> None:

image = image.clip(0, 255).astype(np.uint8)

classes = self.dataset_meta.get('classes', None)

palette = self.dataset_meta.get('palette', None)

gt_img_data = None

pred_img_data = None

if data_sample is not None:

data_sample = data_sample.cpu()

if draw_gt and data_sample is not None:

gt_img_data = image

if 'gt_instances' in data_sample:

gt_img_data = self._draw_instances(image,

data_sample.gt_instances,

classes, palette)

if 'gt_sem_seg' in data_sample:

gt_img_data = self._draw_sem_seg(gt_img_data,

data_sample.gt_sem_seg,

classes, palette)

if 'gt_panoptic_seg' in data_sample:

assert classes is not None, 'class information is ' \

'not provided when ' \

'visualizing panoptic ' \

'segmentation results.'

gt_img_data = self._draw_panoptic_seg(

gt_img_data, data_sample.gt_panoptic_seg, classes, palette)

########

draw_image_for_each_class = True

########

if draw_pred and data_sample is not None:

pred_img_data = image

if 'pred_instances' in data_sample:

pred_instances = data_sample.pred_instances

pred_instances = pred_instances[

pred_instances.scores > pred_score_thr]

pred_img_data = self._draw_instances(image, pred_instances,

classes, palette)

################

each_class_instances_image_list = self._draw_each_class_instances(image, pred_instances,

classes, palette)

################

if 'pred_sem_seg' in data_sample:

pred_img_data = self._draw_sem_seg(pred_img_data,

data_sample.pred_sem_seg,

classes, palette)

if 'pred_panoptic_seg' in data_sample:

assert classes is not None, 'class information is ' \

'not provided when ' \

'visualizing panoptic ' \

'segmentation results.'

pred_img_data = self._draw_panoptic_seg(

pred_img_data, data_sample.pred_panoptic_seg.numpy(),

classes, palette)

if gt_img_data is not None and pred_img_data is not None:

drawn_img = np.concatenate((gt_img_data, pred_img_data), axis=1)

elif gt_img_data is not None:

drawn_img = gt_img_data

elif pred_img_data is not None:

drawn_img = pred_img_data

else:

# Display the original image directly if nothing is drawn.

drawn_img = image

# It is convenient for users to obtain the drawn image.

# For example, the user wants to obtain the drawn image and

# save it as a video during video inference.

self.set_image(drawn_img)

if show:

self.show(drawn_img, win_name=name, wait_time=wait_time)

#########

if draw_image_for_each_class and show:

for each_class_image in each_class_instances_image_list:

self.show(each_class_image, win_name=name, wait_time=wait_time)

#########

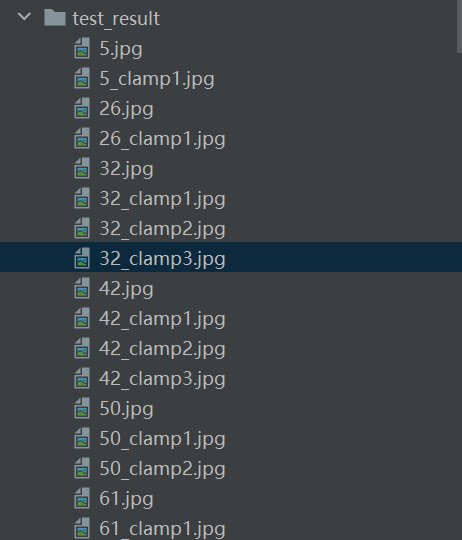

if out_file is not None:

mmcv.imwrite(drawn_img[..., ::-1], out_file)

##############

if draw_image_for_each_class:

for i,each_class_image in enumerate(each_class_instances_image_list):

out_file_ = out_file

basename = os.path.basename(out_file_)

out_file_ = os.path.split(out_file_)[0] + '/'+os.path.splitext(basename)[0] + "_" + classes[i] + \

os.path.splitext(basename)[-1]

print(out_file_)

mmcv.imwrite(each_class_image[..., ::-1],out_file_)

##############

else:

self.add_image(name, drawn_img, step)添加、修改的代码 一共有 四处,用

#############

code

#############

进行了包括,建议不要直接替换,可能存在版本不一致,出现其他问题,对比进行添加修改
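The per-class output path is built from out_file and the class name. A minimal stand-alone sketch of that naming scheme is given below; the helper name `per_class_path` and the class list are hypothetical and only serve the illustration. It looks the class name up by label id, so a detection with label 2 gets the third class name even when only two classes were detected.

```python
import os
from typing import Optional, Sequence


def per_class_path(out_file: str, label: int,
                   classes: Optional[Sequence[str]] = None) -> str:
    """Insert the class name (or the raw label id) before the extension."""
    root, ext = os.path.splitext(out_file)
    class_name = classes[label] if classes is not None else str(label)
    return f"{root}_{class_name}{ext}"


classes = ("person", "bicycle", "car")  # made-up class list
for label in (0, 2):  # only two of the three classes were detected
    print(per_class_path("outputs/demo.jpg", label, classes))
```

Splitting the full path with os.path.splitext keeps the directory part untouched, so an out_file without a directory component still yields a relative path.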

Add _draw_each_class_instances()

==Place the function right below _draw_instances()==

    def _draw_each_class_instances(self, image: np.ndarray,
                                   instances: 'InstanceData',
                                   classes: Optional[List[str]],
                                   palette: Optional[List[tuple]]
                                   ) -> List[np.ndarray]:
        # Nothing to split: return the untouched image as the only entry.
        if 'labels' not in instances or len(instances.labels) == 0:
            return [image]

        each_class_instance_image_list = []
        # Visit the detected label ids in ascending order.
        for label in sorted(set(instances.labels.tolist())):
            # Boolean-mask indexing keeps every field of the instances
            # (bboxes, labels, scores and, if present, masks), the same
            # way add_datasample() filters them by score.
            instances_for_label = instances[instances.labels == label]
            each_class_instance_image = self._draw_instances(
                image, instances_for_label, classes, palette)
            each_class_instance_image_list.append(each_class_instance_image)
        return each_class_instance_image_list